Let's take a quick look at the history and development of HTTP (Hypertext Transfer Protocol), in order to better understand the changes proposed for version 2.0.

HTTP is one of the protocols that have fueled the spectacular growth of the Internet: it lets clients communicate with servers, which is the foundation of the Internet as we know it today. It was initially designed as a simple protocol for transferring a file from a server to a client (version 0.9, proposed in 1991). Thanks to the protocol's undeniable success, billions of devices now communicate using HTTP (the current version is 1.1). The extraordinary diversity of today's content and applications, together with users' expectations of fast interactions, push HTTP 1.1 beyond the limits its designers imagined. Consequently, to enable the next leap in Internet performance, a new version of the protocol is needed, one that removes the current limitations and makes possible a new class of higher-performance applications.

Latency and bandwidth are the two properties that determine how fast data travels across a network:

Latency (one-way / round-trip) - the time it takes a packet to travel from the source to the destination (one-way), or to the destination and back (round-trip)

Bandwidth - the maximum throughput of a communication channel

To use an analogy with a house's plumbing, bandwidth corresponds to the diameter of a water pipe: a larger diameter lets more water through at once. However, if the pipe starts out empty, the water reaches its destination only after traveling the entire length of the pipe, whatever its diameter. That delay is the latency.

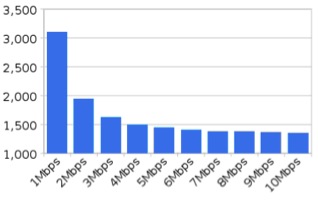

It's intuitive to judge a connection's performance by its bandwidth (a 10 Mbps connection is better than a 5 Mbps one), but bandwidth is not the only factor: because web applications typically use many short-lived connections and requests, latency influences performance more than bandwidth does.

The conclusion is that any reduction in communication latency directly speeds up web applications. If improved protocols could reduce the number of exchanges (round trips) needed between the two ends of a connection, the same data could be transferred in less time. This is one of the objectives of HTTP 2.0.
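To make the latency argument concrete, here is a back-of-the-envelope model in Python. It is a simplification, not a measurement: it assumes 90 resources (the average page size quoted later in this article) fetched one after another on a single connection, each costing one round trip plus its transfer time. The 20 KB average resource size and the 100 ms round-trip time are hypothetical values, and TCP handshakes, slow start and parallel connections are deliberately ignored.

```python
# Simplified model (assumption): resources are fetched one after another on a
# single connection; each costs one round trip plus its transfer time.
# TCP handshakes, slow start and parallel connections are ignored.

def load_time_ms(num_resources: int, avg_size_kb: float,
                 rtt_ms: float, bandwidth_mbps: float) -> float:
    """Estimate the total time (ms) to fetch `num_resources` resources."""
    # 1 KB is taken as 1000 bytes = 8 kilobits; 1 Mbps = 1 kilobit per ms,
    # so kilobits / Mbps gives milliseconds.
    transfer_ms = avg_size_kb * 8 / bandwidth_mbps
    return num_resources * (rtt_ms + transfer_ms)


baseline = load_time_ms(90, 20, rtt_ms=100, bandwidth_mbps=5)
double_bandwidth = load_time_ms(90, 20, rtt_ms=100, bandwidth_mbps=10)
half_latency = load_time_ms(90, 20, rtt_ms=50, bandwidth_mbps=5)

print(baseline, double_bandwidth, half_latency)  # 11880.0 10440.0 7380.0
```

Under these assumptions, doubling the bandwidth shortens the load by about 12%, while halving the round-trip time shortens it by about 38%, because the fixed per-request round trip dominates the short transfers.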

To understand the limitations of version 1.1, it helps to take a quick look at TCP (Transmission Control Protocol), which carries the data between client and server:

Although HTTP does not strictly require TCP as its transport protocol, one of the goals of HTTP 2.0 is to adapt the standard so that it takes advantage of these characteristics of the transport layer, in order to substantially improve the speed perceived by users of web applications.

One of the aims of HTTP 1.1 was to improve HTTP performance. Unfortunately, although the standard specifies features such as request pipelining (sending several requests without waiting for each response), these proved very hard to use correctly in practice, and most browsers currently disable pipelining by default. Even with pipelining, the server must return the responses in the order the requests were sent. Consequently, HTTP 1.1 effectively imposes a strict order on the requests sent to a server: a client sends a request and must wait for the response before sending another request on the same connection. A large response can therefore block a connection, preventing other requests from being processed in the meantime. Moreover, the server cannot act on the priorities of the client's requests.
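The head-of-line blocking described above can be illustrated with a toy simulation. It is a sketch under strong assumptions: transfer times are given in arbitrary units, network latency is ignored, and "multiplexing" is modeled as ideal round-robin sharing of the link. That is the behavior HTTP 2.0 aims for, not a description of any real implementation, and the function names are hypothetical.

```python
from itertools import accumulate


def serial_finish_times(durations):
    """One HTTP 1.1 connection: each response must finish before the next starts."""
    return list(accumulate(durations))


def interleaved_finish_times(durations, chunk=1):
    """Idealized multiplexing: active responses share the link round-robin, `chunk` units at a time."""
    remaining = list(durations)
    finish = [0] * len(durations)
    clock = 0
    while any(r > 0 for r in remaining):
        for i in range(len(remaining)):
            if remaining[i] > 0:
                step = min(chunk, remaining[i])
                clock += step
                remaining[i] -= step
                if remaining[i] == 0:
                    finish[i] = clock
    return finish


# A large response (10 time units) queued ahead of two small ones (1 unit each).
durations = [10, 1, 1]
print(serial_finish_times(durations))       # [10, 11, 12]
print(interleaved_finish_times(durations))  # [12, 2, 3]
```

The total time is the same (12 units) in both cases, but with interleaving the two small responses no longer wait behind the large one.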

Web developers have found workarounds for these limitations, which are now considered recommended practices for web application performance:

• most browsers open up to six simultaneous connections to the same domain, as a substitute for the current impossibility of requesting several resources in parallel over a single connection; we have already mentioned that a page is made up of about 90 resources on average; web developers have pushed this workaround further by distributing content across several domains (domain sharding) in order to force as many resources as possible to be downloaded in parallel.

• files of the same type (JavaScript, CSS - Cascading Style Sheets, images) are concatenated into a single resource (resource bundling, image sprites) to avoid the overhead HTTP imposes on downloading many small resources; some files are included directly in the page source (inlining) so as to avoid an additional HTTP request altogether.
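The effect of parallel connections and domain sharding can be estimated with simple arithmetic. This sketch assumes that every connection carries one request at a time and that each "round" of requests costs one round trip regardless of response size. The 90 resources and six connections per domain come from the text above; the three shard domains are a hypothetical example.

```python
import math


def request_rounds(num_resources: int, conns_per_host: int, hosts: int) -> int:
    """Sequential request rounds when every connection carries one request at a time."""
    return math.ceil(num_resources / (conns_per_host * hosts))


for conns, hosts in [(1, 1), (6, 1), (6, 3)]:
    print(f"{conns} conn(s) x {hosts} host(s): "
          f"{request_rounds(90, conns, hosts)} rounds, "
          f"{conns * hosts} TCP connections, {hosts} DNS lookup(s)")
```

With three shards the rounds drop from 15 to 5, but the browser now opens 18 TCP connections and performs 3 DNS lookups, which is precisely the extra cost discussed in the following paragraph.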

Although these methods are considered "good development practices", they stem from the current limitations of the HTTP standard and create other problems. Using several connections and several domains to serve a single page leads to network congestion, unnecessary extra steps (DNS lookups, TCP connection setup) and additional load on servers and network intermediaries (more sockets kept busy answering more requests). Concatenating similar files hinders efficient caching on the client, since a change to any one file invalidates the entire bundle, and it works against the modularity of applications. HTTP 2.0 addresses these limitations.
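The caching drawback of bundling can be quantified with a small sketch. It assumes the client caches each file by URL and that a bundle is a single file, so changing any module inside it produces a new bundle. The module names and sizes are hypothetical.

```python
def bytes_to_refetch(module_sizes: dict, changed: set, bundled: bool) -> int:
    """Bytes a client with a warm cache must download again after `changed` modules are updated."""
    if bundled:
        # Any change yields a new bundle file, so the whole bundle is fetched again.
        return sum(module_sizes.values()) if changed else 0
    # Served separately, only the modified files are fetched again.
    return sum(module_sizes[name] for name in changed)


# Hypothetical module names and sizes in bytes.
modules = {"app.js": 40_000, "vendor.js": 250_000, "widgets.js": 30_000}
print(bytes_to_refetch(modules, {"app.js"}, bundled=True))   # 320000
print(bytes_to_refetch(modules, {"app.js"}, bundled=False))  # 40000
```

A one-line change in `app.js` costs the whole 320 KB bundle when files are concatenated, versus 40 KB when they are served separately, which is the trade-off HTTP 2.0 tries to remove by making many small requests cheap.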

The effort to improve the HTTP standard is considerable. Given how widespread the protocol currently is, the intention is to bring clear improvements to the issues mentioned above, not to rewrite or substantially change the current specification.

The main goals of the new version are:

The major change brought by version 2.0 is the way the content of an HTTP request is conveyed between client and server. Messages are encoded in a binary format, so that several requests and responses can be carried in parallel over the same TCP connection.
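To show what "binary" means in practice, the sketch below encodes and parses a frame header following the layout of the final HTTP/2 specification (RFC 7540), which was published after this article was written; the drafts current at the time may differ in detail. The header is 9 bytes: a 24-bit payload length, an 8-bit type, 8 bits of flags, one reserved bit and a 31-bit stream identifier. The helper function names are hypothetical; only the field layout and constant values come from the RFC.

```python
# Frame type and flag values as defined in RFC 7540 (final HTTP/2).
DATA = 0x0
HEADERS = 0x1
END_STREAM = 0x1
END_HEADERS = 0x4


def encode_frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    """Build the 9-byte frame header: 24-bit length, type, flags, R bit + 31-bit stream id."""
    if not 0 <= length < 2**24:
        raise ValueError("payload length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream identifier must fit in 31 bits")
    return (length.to_bytes(3, "big")
            + bytes([frame_type, flags])
            + stream_id.to_bytes(4, "big"))  # reserved bit left as 0


def decode_frame_header(header: bytes) -> tuple:
    """Parse a 9-byte frame header back into (length, type, flags, stream_id)."""
    if len(header) != 9:
        raise ValueError("frame header is exactly 9 bytes")
    length = int.from_bytes(header[0:3], "big")
    frame_type, flags = header[3], header[4]
    stream_id = int.from_bytes(header[5:9], "big") & 0x7FFFFFFF  # ignore reserved bit
    return length, frame_type, flags, stream_id


header = encode_frame_header(5, DATA, END_STREAM, stream_id=3)
print(header.hex())                 # 000005000100000003
print(decode_frame_header(header))  # (5, 0, 1, 3)
```

A fixed-size binary header like this can be parsed with a few byte operations, unlike HTTP 1.1's text headers, which must be scanned for line endings.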

The following notions help explain how requests and responses are actually multiplexed:

A single connection can carry many bidirectional streams. Communication within a stream takes place through messages, which are made up of frames. Frames belonging to different streams can be interleaved on the connection; each frame carries the identifier of its stream, so the receiving end can reassemble the messages. This mechanism of splitting and reassembling messages is similar to the one used at the TCP level. This is the most important change in HTTP 2.0, since it allows web developers to:
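The stream, message and frame relationship can be sketched as follows. The function names are hypothetical and the 4-byte payload limit is chosen only to keep the output readable. The sketch relies on frames of the same stream arriving in order, which TCP guarantees; the odd stream numbers 1 and 3 follow the convention that client-initiated streams use odd identifiers.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id: int, message: bytes, max_payload: int = 4):
    """Cut one message into (stream_id, payload) frames of at most `max_payload` bytes."""
    return [(stream_id, message[i:i + max_payload])
            for i in range(0, len(message), max_payload)]


def interleave(*streams):
    """Put frames of several streams on one connection, alternating between streams."""
    wire = []
    for group in zip_longest(*streams):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(wire):
    """Rebuild each message by appending payloads to the stream named in the frame."""
    messages = defaultdict(bytes)
    for stream_id, payload in wire:
        messages[stream_id] += payload
    return dict(messages)


html = split_into_frames(1, b"<html>hello</html>")
css = split_into_frames(3, b"body{color:red}")
wire = interleave(html, css)
print([stream_id for stream_id, _ in wire])  # [1, 3, 1, 3, 1, 3, 1, 3, 1]
print(reassemble(wire))  # {1: b'<html>hello</html>', 3: b'body{color:red}'}
```

Because every frame names its stream, neither message has to wait for the other to finish before it can start moving across the connection.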

One of the obvious limitations of HTTP 1.1 is that the server cannot send multiple responses to a single client request. In the case of a web page, the server knows that besides the HTML content the client will also need JavaScript resources, images, etc. Why not eliminate the client's need to request these resources altogether and let the server send them as additional responses to the client's initial request? This is the motivation behind the feature called server push. It resembles the HTTP 1.1 practice of including the content of some resources directly in the page sent to the client (inlining). However, the great advantage of server push is that the client can store the received resources in its cache, thus avoiding further requests.
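The request savings of server push can be modeled with a toy client and server. This is a sketch, not the protocol: push is modeled as the server simply returning extra responses, whereas real HTTP/2 announces pushed resources with PUSH_PROMISE frames and lets the client decline them. The site content, the `PUSH_MAP` policy and all names are hypothetical.

```python
# Hypothetical site content and push policy, for illustration only.
RESOURCES = {
    "/index.html": b"<html>...</html>",
    "/style.css": b"body{}",
    "/app.js": b"init();",
}
PUSH_MAP = {"/index.html": ["/style.css", "/app.js"]}


def serve(path: str, push_map: dict) -> list:
    """Return the requested response followed by any resources the server pushes."""
    responses = [(path, RESOURCES[path])]
    for pushed in push_map.get(path, []):
        responses.append((pushed, RESOURCES[pushed]))
    return responses


class Client:
    def __init__(self, push_map: dict):
        self.push_map = push_map
        self.cache = {}
        self.requests_sent = 0

    def get(self, path: str) -> bytes:
        if path in self.cache:  # served from cache, no request needed
            return self.cache[path]
        self.requests_sent += 1
        for resource_path, body in serve(path, self.push_map):
            self.cache[resource_path] = body  # pushed responses are cached too
        return self.cache[path]


for label, push_map in [("with push", PUSH_MAP), ("without push", {})]:
    client = Client(push_map)
    for path in ["/index.html", "/style.css", "/app.js"]:
        client.get(path)
    print(label, client.requests_sent)  # with push: 1, without push: 3
```

Unlike inlined content, the pushed files land in the cache under their own URLs, so a later page that uses `/app.js` needs no request at all.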

In HTTP 1.1, every request the client sends carries its full set of headers for the server's domain (cookies included). In practice, this adds between 500 and 800 bytes to each request. HTTP 2.0 improves on this by no longer resending headers that have not changed: since only one connection is open with the server, the server can assume that a new request has the same headers as the previous one unless told otherwise. In addition, the header information is compressed to make its transmission more efficient.
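The idea of sending only the headers that changed since the previous request can be sketched as a dictionary diff. This illustrates the principle described above, not the actual wire format: the final HTTP/2 standard adopted HPACK (RFC 7541), which uses static and dynamic tables of previously sent header fields plus Huffman coding. The function names and header values are hypothetical.

```python
def encode_delta(previous: dict, current: dict) -> dict:
    """Keep only headers that are new or changed, plus names that disappeared."""
    changed = {name: value for name, value in current.items()
               if previous.get(name) != value}
    removed = [name for name in previous if name not in current]
    return {"set": changed, "remove": removed}


def apply_delta(previous: dict, delta: dict) -> dict:
    """Rebuild the full header set on the receiving side."""
    headers = dict(previous)
    headers.update(delta["set"])
    for name in delta["remove"]:
        headers.pop(name, None)
    return headers


first = {"host": "example.com", "user-agent": "ExampleBrowser/1.0",
         "accept": "text/html", "cookie": "session=abc123"}
second = {**first, "accept": "text/css"}

delta = encode_delta(first, second)
print(delta)  # {'set': {'accept': 'text/css'}, 'remove': []}
```

The first request on a connection is encoded against an empty header set, so it still carries everything; every later request carries only the difference, and both ends must keep the previous header set in sync.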

HTTP 2.0 is still a standard in the making. Most of its core ideas were taken from SPDY, a protocol initiated by Google. SPDY continues to exist alongside the HTTP 2.0 standardization effort, providing a testing ground for trying out and validating experimental ideas. According to the currently announced timetable, the HTTP 2.0 specification is expected to be finalized in autumn 2014, followed by available implementations.

Building on the extraordinary success of HTTP, version 2.0 aims to remove the current limitations and to offer mechanisms that can sustain the further development of the Internet.


by Ovidiu Mățan